Project 2025: Cheat Sheet for Tonight’s Debate.

Posted on | September 9, 2024 | Comments Off on Project 2025: Cheat Sheet for Tonight’s Debate.

Mike Magee

Donald Trump says he’s never heard of Project 2025. Denials aside, expect the term Project 2025 to come up multiple times in this evening’s debate. But you are less likely to hear the name Kevin Roberts. He’s been president of the Heritage Foundation, and the voice for Project 2025 until it became too hot to handle and Trump publicly threw him and the controversial 900 page document overboard.

But few loyalists deny that both Roberts and the Project’s policy objectives will be front and center if a second Trump presidency were to prevail. Like Leonard Leo, the Chairman of the Board of the Federalist Society that engineered a conservative takeover of the Supreme Court, Roberts is one of the shadowy figures in which the Grand Old Party is rich. They are content to wield power behind the scenes, and work doggedly, sometimes for decades, to achieve their policy objectives.

As a result, the public is largely unaware of the full story. For example, you are probably unaware that Project 2025’s predecessor was the 7th edition of the Heritage Foundation’s “Mandate for Leadership,” released in the lead-up to the 2016 election. Trump embraced 64% of its policy proposals in the first year of his presidency.

And you may be surprised to learn that 8 out of 10 of the contributors to Project 2025 were members of Trump’s administration – including conservative fan favorites like Homeland Security Secretary Ken Cuccinelli, adviser to the president Peter Navarro, Defense Secretary Chris Miller, and OMB head Russell Vought.

Of course, when you are dealing with a 900-page tome, it’s not surprising that most Americans get lost in the weeds and lose interest. So financial guru Steve Rattner has helped out by directing you to a few pages:

Page 326: Title I funding is a rural and red state favorite that has to go. It is a major funder of public schools in low income areas, and has supported the growth of charter schools. Nationwide projections suggest a 6% loss of teachers, affecting nearly 3 million students. In some states like Louisiana, the projections are more dire, with 12% of teachers left unemployed.

Page 337: Look for monthly payments for student debt for those who never gained their diplomas to rise from $78 per month to $308 per month. Biden’s proposed Student Loan Forgiveness would be a goner.

Page 365 & 440: Few are aware that the Inflation Reduction Act is mainly intended to aid our nation’s shift to renewable clean energy. And most agree it has been very successful, and has had a side benefit of helping to curb inflation. Other Biden legislation is targeted for destruction as well including the government’s ability to negotiate drug prices, and successful implementation of the Bipartisan Infrastructure Law.

Page 458: 63% of all abortions now are first trimester medication abortions using mifepristone. As a result, the need for surgical abortions has declined from 1.5 million to just 0.4 million in the past year. Project 2025 asks that the FDA ban the use of mifepristone.

Page 468: If you’re low income and live in Wisconsin, hold onto your hat. This proposal places a lifetime cap on enrollment in the Medicaid program. This would result in 41% of Wisconsin enrollees losing their Medicaid benefits. Nationwide, 19 million of the 95 million Medicaid enrollees (that’s 5% of our population) would lose their health insurance coverage.

Page 482: Get ready for “familial in-home child care” because Project 2025 has Head Start, another popular program especially in rural counties and red states, on the chopping block.

Page 524: Civil service employees enjoy a range of protections to prevent politically motivated threats to their ongoing employment. Project 2025 has identified 50,000 civil servants for reclassification as political appointees. This would greatly expand Trump’s executive power were he to be elected, essentially creating the “deep state” he’s been warning us all about.

Page 696: Here you’ll find the promise to condense seven tax brackets down to two – 15% and 30%. That would ensure lower tax rates for the rich, and higher taxes for our lower-income citizens. The richy rich well remember Trump’s cutting their corporate taxes from 35% to just 21%. Project 2025 says Trump will double down and lower corporate rates further.

And of course there’s more. And you won’t hear Trump quoting chapter and verse of Project 2025 tonight. So what’s the point? It’s this. Regardless of the debate performance of these two candidates this evening, keep in mind that whether it be the Federalist Society or the Heritage Foundation, they have been working for at least two decades to achieve a theocratic autocracy – and they’ve gotten pretty damn close. They can smell victory.

So it is not enough to simply reject Trump. We cannot limp to the finish line. To neutralize the Kevin Robertses and the Leonard Leos of our world, Republicans up and down the ballot (even the good ones) must be soundly rejected. The message must be clear to reset our democracy. If you threaten our freedoms, as you have with women’s reproductive rights, you will never again successfully run for elective office in America.

Tags: 2024 election > conservative supreme court > federalist society > heritage foundation > kevin roberts > Leonard Leo > Project 2025 > republican party > steven rattner > trump

A Healthy Educated Labor Force Is The Key To Economic Prosperity.

Posted on | September 2, 2024 | Comments Off on A Healthy Educated Labor Force Is The Key To Economic Prosperity.

Mike Magee

Another Labor Day has come and gone. But support for the Labor Movement, and the focus on housing, health services, and economic mobility are front and center for the Harris-Walz ticket as Tuesday, November 5th approaches.

As Dora Costa PhD, Professor of Economics at UCLA puts it:

“Health improvements were not a precondition for modern economic growth. The gains to health are largest when the economy has moved from ‘brawn’ to ‘brains’ because this is when the wage returns to education are high, leading the healthy to obtain more education. More education may improve use of health knowledge, producing a virtuous cycle.”

Information technology is clearly making its mark as well. As one report put it, “Recent advances in artificial intelligence and robotics have generated a robust debate about the future of work. An analogous debate occurred in the late nineteenth century when mechanization first transformed manufacturing.”

The National Bureau of Economic Research (NBER) recently stated, “The story of nineteenth century development in the United States is one of dynamic tension between extensive growth as the country was settled by more people, bringing more land and resources into production, and the intensive growth from enhancing the productivity of specific locations.”

At the turn of the 20th century, industrialization and urbanization were mutually reinforcing trends. The first industrial revolution was “predominantly rural” with 83% of the 1800 labor force involved with agriculture, producing goods for personal, and at times local market consumption. The few products that were exported far and wide at the time – cotton and tobacco – relied on slave labor to be profitable.

The U.S. population was scarcely 5 million in 1800, occupying 860,000 square miles – that’s roughly 6 humans per square mile. Concentrations of humans were few and far between in this vast new world. In 1800, only 33 communities had populations of 2,500 or more individuals, representing 6% of our total population at the time. Transportation into and out of these centers relied as much as possible on waterways, including the Eastern seaboard and internal rivers. Roads were primitive, and shipping goods by horse and wagon was slow (a horse could generally travel 25 miles a day) and expensive (wagon shipment in 1816 added several days and cost 70 cents per ton-mile).

At least as important was the creation of a national rail network that had begun in 1840. This transformed market networks, increasing both supply and demand. The presence of rail transport decreased the cost of shipping by 80% seemingly overnight, and incentivized urbanization.

But by 1900, the U.S. labor force was only 40% agricultural. Four in 10 Americans now lived in cities with 2,500 or more inhabitants, and 25% of Americans lived in the growing nation’s 100 largest cities. The rivers and streams drove water wheels and later turbine engines. But this dependency lessened with the invention of the steam engine. Coal and wood powered burners could then create steam (multiplying the power of water several times) to drive engines. The choices for population centers now had widened.

Within a short period of time, self-reliant home manufacturing couldn’t compete with urban “machine labor.” Those machines were now powered not by waterpower but “inanimate power” (steam and eventually electricity). Mechanized factories were filled with newly arrived immigrants and freed slaves engaged in the “Great Migration” northward. As the factory workforce grew, so did specialization of tasks and occupation titles. The net effect was quicker production (7 times quicker than non-machine labor).

Even before the information revolution, the internet, telemedicine, and pandemic-driven nesting, all of these 20th century trends had begun to flatten. The linkages between transportation, urbanization, and market supply were coming apart. Why?

According to the experts, “Over the twentieth century new forces emerged that decoupled manufacturing and cities. The spread of automobiles, trucks, and good roads, the adoption of electrical power, and the mechanization of farming are thought to have encouraged the decentralization of manufacturing activity.”

What can we learn from all this in 2024?

First, innovation and technology stoke change, and nothing is permanent.

Second, markets shape human preferences, and vice versa.

Third, in the end, equal access to education and health services is critical to long term economic prosperity. When it comes to the human species, a healthy well-educated workforce fuels innovation, growth, and opportunity for all. Happy Labor Day!

Tags: Dora Costa > economics > Harris-Walz > health services > Industrial Revolution > NBER > prosperity > technology > transportation

What Are the Harris-Walz Health Policy Team Reading?

Posted on | August 26, 2024 | 4 Comments

Mike Magee

Clearly the Harris-Walz ticket has been doing its homework. Last week, this book was spotted on one prominent thought-leader’s pile: “Human Evolutionary Demography.” It’s a 780-page academic tour de force led by veteran scientist Oskar Burger, leader of the Max Planck Institute for Demographic Research and the Laboratory of Evolutionary Biodemography.

That’s the Institute founded in 1917 in Berlin. Their first director? Albert Einstein. These days, its researchers work (in an age of “alternate facts”) to separate justified belief from opinion. Their major focus is on “categories of thought, proof, and experience” at the crossroads of “science and ambient cultures.”

This is the field of Human Evolutionary Demography, a blending of natural science with social science. Demographers study populations and explore how humans behave, organize and thrive focusing heavily on birth, migration, and aging.

This has been a year of just that in American politics. First, the fallout of the Dobbs decision caught Republicans with their electoral pants down in reproductive freedom referendums in Kansas, Michigan, Kentucky and Vermont. Southern migration of Democrats to former red states like Michigan, Arizona, Georgia, and North Carolina has turned them various shades of purple. And this summer, octogenarian candidates from both parties have been all the rage, literally.

Up until July 21, 2024, the race for the Presidency was between two aging candidates with visible mental and physical disabilities. The victor was destined for a term of office that would extend into his 80s.

The emergence of Kamala Harris as the Democratic nominee was a reflection of the electorate’s growing discomfort with turning a blind eye to the realities of aging. It also suggested that Americans, especially Gen X’ers, have grown tired of Boomer dominance in the lives of an increasingly multi-cultural America – tired as well of growing income disparity, attacks on reproductive freedom, and declining life expectancy in America.

But why the sudden interest in “Human Evolutionary Demography?” The answer lies in the numbers. Back in 2012 Oskar Burger studied Swedes and noted that in 1800 their life expectancy was 32 years. They gained an additional 20 years in the century that followed, and 30 more years by 2000.

What stumped Burger was not the gains over these two hundred years. Instead he focused on the question, “Why did it take the human race so long to progress?” The bottom line is this: we left chimpanzees behind in the evolutionary dust some 6.6 million years ago. We limped along, not faring very well, for all but the last 200 years. In the past century, a moment in time spanning just 4 of our historic 8000 plus human generations, we took off. This period coincided with rapid scientific and technologic advances, cleaner air and water, greater nutritional support, improved education and housing, expanded public health related governmental policy, and establishment of a safety net for our most vulnerable citizens.

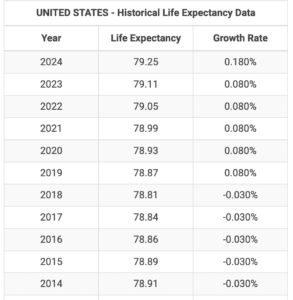

But in the past decade, growth in U.S. life expectancy has all but stalled. For the first time, we actually saw declines each year from 2014 to 2019. For the decade just past, the numbers improved by less than 1/2 of 1%. When first studied, declines were blamed on losses in working age adults due to trauma, addiction, suicide or “deaths of despair.”

But recent studies reveal losses due to poor maternal/fetal care, especially in red states, and made worse by fallout of the Dobbs decision. A second complicator has been losses starting at age 65 from complications of cardiovascular disease and diabetes, made worse by obesity and poor health care follow-up.

This has led the Max Planck Institute to issue an alert to U.S. health experts: “Our findings suggest that the U.S. faces a ‘double jeopardy’ from both midlife and old-age mortality trends, with the latter being more severe.”

Women’s reproductive advocates say it’s really a “triple jeopardy” demanding grass roots advocacy focused on access today, and political victory up and down the ballot in November. In their words, “Today, and every day, we work to ensure that every patient who seeks sexual and reproductive health care can access it, and to build a just world that includes nationwide access to abortion for all — no matter what.”

If this is true, a careful read of “Human Evolutionary Demography” could direct a 3-prong health policy approach for the Harris-Walz campaign:

- Expanded safety net to address “deaths of despair.”

- Expansion of the ACA toward Universal Health Insurance to address burden of chronic diseases.

- Federal guarantees of reproductive freedom.

Tags: Albert Einstein > Biden > deaths of despair > Dobbs > Harris-Walz > Human Evolutionary Demography > maternal fetal health > Max Planck Institute > octogenarian candidates > trump

Dobbs’ Created “Moral Distress” – Country Responds “We’re Not Going Back!”

Posted on | August 7, 2024 | 2 Comments

Mike Magee

When Andrew Jameton, a Nursing Professor at the Department of Mental Health and Community Nursing at UCSF, published “Nursing Practice: The Ethical Issues” in 1984, “Moral Distress” was a novel term in clinical health care. It focused primarily on nurses and the “care that they were expected to provide but ethically opposed.”

Over the past four decades, the definition has expanded and now encompasses the “inability to provide the care that one feels morally compelled to provide.” Beyond its impact on individual health professionals, it has growing health policy implications, explosively reverberating in the wake of the recent Dobbs decision.

There are approximately 1600 health care facilities nationwide that provide abortion care in the U.S. In the wake of the Dobbs decision overturning Roe v. Wade, 14 states have near complete bans on all abortions, and this reproductive care is severely restricted in an additional 11 states “with few or no exceptions for maternal health or life endangerment.”

The impact of these rulings has created not only a moral dilemma for health professionals, but also intense legal jeopardy. As one Tennessee Obstetrician recently put it, “There are weeks when I commit multiple felonies.”

There now exists a validated psychometric tool, called the Moral Distress Thermometer (MDT), to measure the mental health impact of the Supreme Court’s actions. Experts recently surveyed 310 practicing clinicians involved in women’s reproductive health care, with a focus on comparing moral distress in those from restricted versus unrestricted states. What they reported in JAMA was that clinicians in restricted states had MDT scores more than double those of their counterparts in protected states.

As one might expect, high scores on the MDT also correlate with higher rates of job burnout and attrition. This means lower rates of abortion care, but also a smaller maternal health workforce overall. This is in states that had already been lagging behind in access to obstetrical and reproductive health care in general. Clinical shortages are expected to rise in the months approaching an historic Presidential election.

Project 2025’s agenda for future women in America is much more expansive and aggressive than restriction of abortion alone. Trump’s denials aside, his selection of JD Vance as a running mate signals an intent to thoroughly engage in restriction of women’s reproductive rights in allegiance with a Supreme Court that appears equally committed.

With that in mind, the massive response to the Harris-Walz ticket appears to go well beyond simple “weird” labeling. Those words are a promise to each other, “We’re not going back.”

Tags: abortion > Dobbs > Harris > job burnout > moral distress > moral distress thermometer > reproductive freedom > roe v. wade > trump > Vance > Walz

What’s In A Voice? Sam Altman Thinks He Knows.

Posted on | August 6, 2024 | Comments Off on What’s In A Voice? Sam Altman Thinks He Knows.

Mike Magee

When OpenAI decided to respond to clamoring customers demanding voice-mediated interaction on ChatGPT, CEO Sam Altman went all in. That’s because he knew this was about more than competitive advantage or convenience. It was about relationships – deep, sturdy, loyal and committed relationships.

He likely was aware, as well, that the share of behavioral health in telemedicine-mediated care had risen from 1% in 2019 to 33% by 2022, and that the pandemic had triggered an explosion of virtual mental health services. In a single year, between 2020 and 2021, the share of psychologists offering both in-person and virtual sessions grew from 30% to 50%. Why? The American Psychological Association suggests these oral communications are personal, confidential, efficient and effective. Or in one word – useful.

As Forbes reported in 2021, “Celebrity endorsements, like Olympic swimmer Michael Phelps’ campaign with virtual therapy startup Talkspace, started to chip away at the long standing stigma, while mindfulness apps like Calm offered meditation sessions at the click of a button. But it was the Covid-19 pandemic and collective psychological fallout that finally mainstreamed mental health.” As proof, they noted mental health start-up funding had increased more than fivefold over the prior four years.

Altman was also tracking history. The first “mass medium” technology in the U.S. was voice activated – the radio. He also understood its growth trajectory a century ago. From a presence in 1% of households in 1923, it became a fixture in 3/4 of all US homes just 14 years later.

Altman also could see the writing on the wall. The up and coming generations, the ones that gently encouraged Biden to exit stage left, were both lonely and connected.

The most recent Nielsen and Edison Research data told him that the average adult in the U.S. now spends four hours a day consuming audio and its associated ads. 67% of that listening was on radios, 20% on podcasts, 10% on music streaming and 3% on satellite radio.

Post-pandemic, younger generations’ use of online audio had skyrocketed. In 2005, only 15% of young adults listened online. By 2023, it had reached 75%. And as their listening has risen, loneliness rates in young adults have declined from 38% in 2020 to 24% now.

A decade earlier, screenwriter Spike Jonze ventured into this territory when he wrote Her. Brilliantly cast, the film featured Joaquin Phoenix as lonely, introverted Theodore Twombly, reeling from an impending divorce. In desperation, he developed more than a relationship (a friendship really) with an empathetic reassuring female AI, voiced by actress Scarlett Johansson.

Scarlett’s performance was so convincing that it catapulted Her into contention for five Academy Awards, winning Best Original Screenplay. It also apparently impressed Sam Altman, who, a decade later, approached Scarlett to be the “voice” of ChatGPT’s virtual lead. She declined, seeing the potential downside of becoming a virtual creature. He subsequently identified a “Scarlett-like” voice actor and chose “Sky” as one of five voice choices to embody ChatGPT. Under threat of a massive intellectual property challenge, Altman recently “killed off” Sky, but the other four virtual companions (out of 400 auditioned) have survived.

As for content so that “what you say” is as well represented as “how you say it,” companies like Google have that covered. Their LLM (Large Language Model) product was trained on content from over 10 million websites, including HealthCommentary.org. Google engineer, Blaise Aguera y Arcas says “Artificial neural networks are making strides toward consciousness.”

Where this all ends up for the human race remains an open question. What is known is that the antidote for loneliness and isolation is relationships. But of what kind? Who knows? Oxford’s Evolutionary Psychologist Robin Dunbar believes he does.

Altman likely paid close attention to this review by Atlantic writer Sheon Han in 2021: “Robin Dunbar is best known for his namesake ‘Dunbar’s number,’ which he defines as the number of stable relationships people are cognitively able to maintain at once. (The proposed number is 150.) But after spending his decades-long career studying the complexities of friendship, he’s discovered many more numbers that shape our close relationships. For instance, Dunbar’s number turns out to be less like an absolute numerical threshold than a series of concentric circles, each standing for qualitatively different kinds of relationships.… All of these numbers (and many non-numeric insights about friendship) appear in his new book, Friends: Understanding the Power of Our Most Important Relationships.”

But what many experts now agree on is that voice seems to be the key. Shorthand for Altman: Pick the right voice and you might just trigger the addition of 149 “friends” for each ChatGPT “buyer.”

Tags: behavioral health > Blaise Aguera y Arcas > Dunbar number > forbes > Friendship > LLM > loneliness > nielsen and Edison research > radio > sam altman > Sheon Han > The Atlantic

“Weird” – Culture and Technology and Politics Collide.

Posted on | August 1, 2024 | 2 Comments

Mike Magee

“It’s not what you said, it’s how you said it,” was an admonition I heard literally hundreds of times growing up. The source was my mother who majored in English and Drama at Rutgers. She was especially tuned in, not only to words and meaning, but also to volume, cadence, speed, and tone.

She passed away in 1995, but her absence was never more apparent than it was this week for two reasons.

The first was the use of the word “weird” by Minnesota Gov. Tim Walz on July 27th, at a rally in St. Cloud, Minnesota. The televised insult, delivered with clarity, apparently struck a chord, and quickly went viral. His targets, the Republican ticket visiting his state that day, felt the sting of his remarks, which were delivered with high performance quality, sarcasm, a twinkle in the eye, and a bit of historic context.

Walz began this way: “The fascists depend on us going back, but we’re not afraid of weird people. We’re a little bit creeped out, but we’re not afraid.”

With the audience warmed up, he continued.

“They went out, you know — because he’s a TV guy (referring to Trump’s choice of Vance for VP) — they go out and try to do this central casting: ‘Oh, we’ll get this guy who wrote a book, Hillbilly Elegy,’ you know, because all my hillbilly relatives went to Yale and became, you know, venture capitalists.” Embracing classic “beginning/middle/end” storytelling, he finished with, “The nation found out what we’ve all known in Minnesota: These guys are just weird.”

As one would expect, other leaders in the Democratic party, including VP hopefuls, were quick to echo the word, with varying success. Thoughtful leaders like Chris Murphy (D-Conn.) added context to commentary while transitioning from words to general misbehavior. He said, “I wish I knew a better way to describe it, Your party’s obsession with drag shows is creepy. Your candidate’s idea to strip the vote away from people without kids is weird. The right wing book banning crusade is super odd. It’s just so far outside the mainstream.”

Over the next few days Opinion columnists weighed in as well. The Washington Post’s Monica Hesse, an English major herself, recounted a story she had read in the 2005 book, Freakonomics. It involved a 1946 radio episode of “The Adventures of Superman” called “The Clan of the Fiery Cross.” Meant to mimic the Ku Klux Klan, the master villain is caught offline describing in dramatic radio voice his own sheeted followers as “suckers” and “little nobodies.” Worse than that, following the episode’s airing, KKK members reportedly returned from work the following evening only to discover their “caped children” chasing and vanquishing their “sheeted siblings.” As reported in the book, one Klan member said, “I never felt so ridiculous in all my life! Suppose my own kid finds my Klan robe some day?”

My second reason this week for feeling my mother’s heightened presence takes us from the ridiculous to the sublime. On July 30th, ChatGPT maker OpenAI announced that the cautionary waiting period for release of their “conversational voice mode” had now come to an end. The new software, shuttered since May over criticism that the AI product “hewed to sexist stereotypes about female assistants being flirty and compliant,” was now good to go.

The original controversy was ignited when Scarlett Johansson accused OpenAI CEO Sam Altman of “AI copying of her voice” after she declined to recreate her performance in the 2013 movie “Her” (a human to AI romance featuring the actress as the robot voice). The new (Scarlett-like) voice over, “Sky”, was one of five voices selected from an audition of 400 voice actors. Users now can select their own assistant. However, it’s down to four, since OpenAI chose to disengage from the controversy by killing off “Sky.”

Federal copyright law aside, the new offering’s significance is nothing short of groundbreaking. As the Tech wizard Gerrit De Vynck reports, it delivers a “conversational voice mode, which can detect different tones of voice and respond to being interrupted, much like a human… the new voice features are built on OpenAI’s latest AI model, which directly processes audio without needing to convert it to text first. That allows the bot to listen to multiple voices at once and determine a person’s tone of voice, responding differently based on what it thinks the person’s emotions are.”

My mother couldn’t have envisioned culture and technology and politics colliding with such force three decades after her passing. But here we are. And as the Post’s Hesse notes, “When your whole political movement is based on a return to some ‘Pleasantville’ vision of American normalcy, ‘weird’ actually hurts.”

Tags: ChatGPT > chris murphy > freakonomics > gerrit de vynck > her > hillbilly elegy > JD Vance > minnesota > monica hesse > OpenAI > sam altman > scarlett johansson > the clan of the fiery cross > tim walz > trump > weird

“The Week Kamala Undressed The Actors” by Leo Tolstoy

Posted on | July 29, 2024 | Comments Off on “The Week Kamala Undressed The Actors” by Leo Tolstoy

Mike Magee

Over the past week, voters have been reintroduced to JD Vance, and have found the experience disquieting. In a 2021 Fox interview, he tied women’s worth to birthing, stating that “We should give miserable, childless lefties less control over our country and its kids…” and claimed that their choice of cats over babies had created a collection of disgruntled women politicians who “are miserable.”

That response calls to mind another character in history: Germaine de Staël, the French writer who in 1803 met Napoleon at the height of his power and asked him, “Who is the greatest woman in the world?” His reply was immediate, “She who has borne the greatest number of children.” The question alone earned her an exile from Paris to Switzerland.

Alas, de Staël had the last laugh, decamping to the bucolic Le château de Coppet on Lake Geneva in Switzerland. She spent the next 10 years organizing his opposition, until fleeing to Austria, then St. Petersburg, while carefully avoiding Napoleon’s northward advancing troops. On Napoleon’s defeat, she returned to Paris in 1814.

Leo Tolstoy’s focus was on Napoleon as well with the publication of War and Peace in 1869. But he could have as easily been reflecting on our two MAGA leaders and their Project 2025 sycophants a century and a half later. And yet, as with Germaine de Staël, they appear to have missed that Vice President Harris was born to lead, something Tolstoy would surely have highlighted.

In his brilliant Epilogue (p.1131), Tolstoy undresses Napoleon while pointing a contributory finger at an endless array of knowing followers. Written 155 years ago, his exposé is poignant and devastating, and worth careful consideration from all those concerned with ethical leadership, governance, and compliance.

On The Rise To Power:

“(The launch requires that) …old customs and traditions are obliterated; step by step a group of a new size is produced, along with new customs and traditions, and that man is prepared who is to stand at the head…A man (like Trump) without conviction, without customs, without traditions, without a name (like Vance)…moves among all the parties stirring up hatreds, and, without attaching himself to any of them, is borne up to a conspicuous place.”

Early Success:

“The ignorance of his associates, the weakness and insignificance of his opponents, the sincerity of his lies, and the brilliant and self-confident limitedness of this man moved him to the head…the reluctance of his adversaries to fight his childish boldness and self-confidence win him…glory…The disgrace he falls into…turns to his advantage…the very ones who can destroy his glory, do not, for various diplomatic considerations…”

Fawning and Bowing to Power:

“All people despite their former horror and loathing for his crimes, now recognize his power, the title he has given himself, and the ideal of greatness and glory, which to all of them seems beautiful and reasonable….One after another, they rush to demonstrate their non-entity to him….Not only is he great, but his ancestors, his brothers, his stepsons, his brothers-in-law are great.”

Turning a Blind Eye:

“The ideal of glory and greatness which consists not only in considering that nothing that one does is bad, but in being proud of one’s every crime, ascribing some incomprehensible supernatural meaning to it – that ideal which is to guide this man and the people connected with him, is freely developed…His childishly imprudent, groundless and ignoble (actions)…leave his comrades in trouble…completely intoxicated by the successful crimes he has committed…”

Self-Adoration, Mobs, and Conspiracy:

“He has no plan at all; he is afraid of everything…He alone, with his ideal of glory and greatness…with his insane self-adoration, with his boldness in crime, with his sincerity in lying – he alone can justify what is to be performed…He is drawn into a conspiracy, the purpose of which is the seizure of power, and the conspiracy is crowned with success….”

The Spell is Broken by a Reversal of Chance:

“But suddenly, instead of the chances and genius that up to now have led him so consistently through an unbroken series of successes to the appointed role, there appear a countless number of reverse chances….and instead of genius there appears an unexampled stupidity and baseness…”

The Final Act – Biden Anoints Kamala:

“A countermovement is performed…And several years go by during which this man, in solitude on his island, plays a pathetic comedy before himself, pettily intriguing and lying to justify his actions, when that justification is no longer needed, and showing to the whole world what it was that people took for strength while an unseen hand was guiding him…having finished the drama and undressed the actor.”

As both Trump and Vance are learning the hard way, celebrity in America is a double-edged sword. In an inaugural speech, the prosecutor met the defendants head-on.

“I took on perpetrators of all kinds. Predators who abused women, fraudsters who ripped off consumers, cheaters who broke the rules for their own gain. So hear me when I say, I know Donald Trump’s (and JD Vance’s) type.”

Kamala Harris understands the assignment.

Tags: Germaine de Stael > JD Vance > Joe Biden > Kamala Harris > Le Chateau de Coppet > Leo Tolstoy > Napoleon > trump > war and peace