“Mr. Trump, I was Superman. You are no Superman!”

Posted on June 3, 2024

With Christopher Reeve, Sept. 25, 2002

Mike Magee

As we approach the 20th anniversary of the death of Christopher Reeve, I’m drawn back to the evening of September 25, 2002, and a private conversation in a back room off the ballroom of the Marriott Marquis Hotel. As we awaited the ceremonial beginnings of the Christopher Reeve Paralysis Foundation Benefit Gala that evening, he said, “What I didn’t expect was that in this country, home of ‘Truth, Justice and the American way,’ hope would be determined by politics.”

That sentiment was no doubt fresh in his mind, since it had appeared a week earlier in his book, “Nothing Is Impossible: Reflections On A New Life” (Random House). And it was top of mind for me last week as I, along with millions of other Americans, awaited a verdict in the New York trial of Donald Trump.

A month earlier, Smithsonian Magazine had run a feature on the first issue of the Superman comic book. The original copy of the 1938 “Action Comics No. 1” had just sold for $6 million at auction. A large part of that value tracked back to Chris Reeve himself – the enduring image and voice of Superman – a genuine American hero.

The famous slogan “Truth, Justice, and the American Way,” however, did not appear in that first publication. It surfaced later, in the early 1940s comic books written by Jerry Siegel and Joe Shuster, “to cheer on American military efforts in World War II.” Its use waxed and waned over the next three decades until 1978. That’s when the Richard Donner film “Superman: The Movie,” starring Christopher Reeve, was released. As the Superman Homepage News acknowledges, it was thanks to Reeve’s performance that “the ‘Truth, Justice and The American Way’ motto was really cemented in popular culture for generations to come.”

In a controversial move at the DC FanDome on October 21, 2021, DC Publisher Jim Lee announced that Superman’s motto “Truth, Justice and the American Way” would be evolving: “the American Way” would now be replaced by “a Better Tomorrow.” A press statement elaborated that the move was made “to better reflect the storylines that we are telling across DC and to honor Superman’s incredible legacy of over 80 years of building a better world.” DC Comics gave Rolling Stone a slightly different spin, saying, “Superman has long been a symbol of hope who inspires people from around the world, and it is that optimism and hope that powers him forward.”

Whether the motivation was commercial, philosophical or political, now, more than two years later, as Trump self-declares his own “Superman status,” it’s worth contrasting two very different versions of “the American way.” As New York Magazine reported in 2022, “Among the many laughably unrealistic images in the Donald Trump NFT collection, one stood out: the illustration of the former president in the classic Superman pose, ripping open his dress shirt to reveal a superhero costume underneath. Trump used this image, which was animated to show lasers shooting out of his eyes, to tease a ‘major announcement’ on December 15, which turned out to be a collection of 45,000 digital trading cards. ‘America needs a superhero!’ Trump proclaimed.”

Mirroring vice-presidential candidate Lloyd Bentsen’s 1988 reply to his opponent, Senator Dan Quayle, who had compared himself to JFK (“Senator, I served with Jack Kennedy. I knew Jack Kennedy. Jack Kennedy was a friend of mine. Senator, you’re no Jack Kennedy.”), Christopher Reeve, were he with us today, could easily reply to our criminally convicted former president, “Mr. Trump, I was Superman. You are no Superman!”

And yet, Superman’s slogan is very much “in play.” Trump counselor Kellyanne Conway took a wrecking ball to its first word, “truth,” when she claimed the legitimacy of “alternative facts” in a 2017 Meet the Press interview. As for “justice,” the Washington Post ran this headline on June 2: “Trump’s attacks on US justice system after his conviction could be used by autocrats, say experts.” And that leaves only “the American Way.”

James Madison, in Federalist No. 51, wrote, “Justice is the end [the anchor] of government. It is the end of civil society. It ever has been, and ever will be pursued until it is obtained, or until liberty be lost in the pursuit.”

Historian John J. Patrick has made a compelling argument that democracy is the American way, and that it is “a never ending quest to narrow the gap between lofty ideals and flawed realities…” But by Professor Patrick’s measures, Trump’s “American Way” is miles apart from Christopher Reeve’s ideal as we knew it.

Trump’s destructive actions, borrowing from Professor Patrick, include:

“Governing ineptly because the most able persons are not selected to rule.”

“Making unwise decisions in government by pandering to public opinion.”

“Eroding political and social authority and unity by encouraging criticism and dissent.”

“Encouraging abuse or disregard of unpopular persons or opinions.”

“Failing to achieve its ideals or to adhere to its basic principles.”

Twenty years ago, at the height of an election season, on October 10, 2004, Christopher Reeve died peacefully with loving family members at his side. He never felt sorry for himself or blamed anyone. As he said, “Some people are walking around with full use of their bodies and they’re more paralyzed than I am.”

As for being a hero himself, his insights were prescient and highly relevant to the current threat to our democracy. He said, “What makes Superman a hero is not that he has power, but that he has the wisdom and the maturity to use the power wisely.”

Tags: alternate facts > Christopher Reeve > Dan Quayle > Donald Trump > Federalist No. 51 > John J. Patrick > justice and the American Way > kellyanne conway > Lloyd Bentsen > superman > truth

“Justice delayed is justice denied . . . but not forever.”

Posted on May 29, 2024

Mike Magee

The date was March 16, 1868. The speaker was the British statesman and future Prime Minister William Ewart Gladstone. During vigorous debate in Parliament that day, Gladstone, responding to supportive cheers from colleagues, said, “But above all, if we be just men, we shall go forward in the name of truth and right, and bear this in mind, that when the case is ripe and the hour has come, justice delayed is justice denied.”

These words ring loud and clear this week for millions of Americans as they await a verdict in the criminal trial of Donald Trump in New York. Whatever their political persuasion, most agree that the presiding judge, Juan Merchan, has been highly competent and efficient in orchestrating the historic trial, and has avoided overreacting to Trump’s labeling him “corrupt” and “conflicted.”

Judge Merchan has been a Justice of the New York Supreme Court since 2009. An experienced and balanced jurist, he has earned the respect of defense attorneys like New York lawyer Ron Kuby, who described him as “a serious jurist, smart and even tempered…a no-nonsense judge…always in control of the courtroom.”

Contrast this with evaluations of Judge Aileen M. Cannon, who recently issued a “curious order” indefinitely postponing a trial date in Trump’s classified documents case after holding seven public hearings over the past 10 months. Her performance led legal analyst Alan Feuer, who has covered criminal justice for the New York Times since 1999, to comment, “The portrait that has emerged so far is that of an industrious but inexperienced and often insecure judge whose reluctance to rule decisively even on minor matters has permitted one of the country’s most important criminal cases to become bogged down in a logjam of unresolved issues.”

Needless to say, the contrast between the two judges is stark. The only thing they seem to have in common is that both were born in Colombia. Personalities and loyalties aside, each will forever be tied to Trump and to their ability to deliver or delay justice. That is because most Americans believe that “fairness” and the “rule of law” require “timely and efficient justice systems.”

What friends of Judge Cannon must consider is whether “justice delayed or disrupted on one dimension may still find its resolution in another.” That is to say, her actions, one way or another, have consequences, and could send the Trump narrative down any number of alleys that place the former President (and his family members) in even greater jeopardy. Witness, for example, the life journey of O.J. Simpson following his acquittal for the murder of Nicole Brown Simpson. Or Harvey Weinstein, whose New York conviction was overturned on a technicality, only to face retrial as more of his victims come forward.

Purposeful delays in justice can boomerang on defendants. For example, the former President succeeded in stonewalling the release of his tax returns for over a decade. But time ran out in 2024, when the IRS announced that he had “claimed massive financial losses twice” (in 2008 and 2012) and owed the government over $100 million. And that was just on a single holding, a Chicago skyscraper. Then there was the 2023 civil verdict for battery and defamation stemming from his 1990s rape of E. Jean Carroll. The $5 million judgment against him took nearly three decades to snare him in justice’s web. And in that same year, New York Attorney General Letitia James convinced Justice Arthur Engoron that the Trump Organization was liable for massive real estate fraud, in a ruling that effectively revoked its license to operate New York-based properties.

What we as a society are currently witnessing is that timely resolutions are central to maintaining “public trust.” People must move on with their lives, and can do so only if social order and public confidence in justice are maintained. Trump’s success with a strategy of delay seems to be waning. The current trials remind us that continuous improvement will always be the order of the day for our legal system: access, efficiency, equity, and timely justice for all.

And should he somehow wiggle his way out of the current downtown New York holding cell, he and his protectors will soon enough learn that “Justice delayed is justice denied . . . but not forever.”

Tags: aileen cannon > arthur engoron > e. jean carroll > juan merchan > justice > o.j.simpson > trials > trump

AI Can Talk, But Can It Think?

Posted on May 22, 2024

Mike Magee

OpenAI says its new GPT-4o is “a step towards much more natural human-computer interaction,” and is capable of responding to your inquiry “with an average 320 millisecond (delay) which is similar to a human response time.” So it can speak human, but can it think human?

The “concept of cognition” has been a scholarly football for the past two decades, centered primarily on “Darwin’s claim that other species share the same ‘mental powers’ as humans, but to different degrees.” But how about genAI powered machines? Do they think?

The first academician to attempt to define the word “cognition” was Ulric Neisser, in the first-ever textbook of cognitive psychology in 1967. He wrote that “the term ‘cognition’ refers to all the processes by which the sensory input is transformed, reduced, elaborated, stored, recovered, and used. It is concerned with these processes even when they operate in the absence of relevant stimulation…”

The word cognition is derived from “Latin cognoscere ‘to get to know, recognize,’ from assimilated form of com ‘together’ + gnoscere ‘to know’ …”

Knowledge and recognition would not seem to be highly charged terms. And yet, in the years since Neisser’s publication, there has been an increasingly intense, and sometimes heated, debate between psychologists and neuroscientists over the definition of cognition.

The focal point of the disagreement has (until recently) revolved around whether the behaviors observed in non-human species are “cognitive” in the human sense of the word. In recent years the discourse has bled over into the fringes, including the belief by some that plants “think” even though they lack a nervous system, or that ants communicating with each other in a colony are an example of “distributed cognition.”

What scholars in the field do seem to agree on is that no definition of cognition exists that will satisfy everyone. But most agree that the term encompasses “thinking, reasoning, perceiving, imagining, and remembering.” Tim Bayne, PhD, a Melbourne-based professor of philosophy, adds that these various capacities must be able to be “systematically recombined with each other,” and not simply triggered by some provocative stimulus.

Allen Newell, PhD, a professor of computer science at Carnegie Mellon, sought to bridge the gap between human and machine cognition when he published a 1958 paper that proposed “a description of a theory of problem-solving in terms of information processes amenable for use in a digital computer.”

Machines gain a leg up in the eyes of some evolutionary biologists, who believe that true cognition involves acquiring new information from various sources and combining it in new and unique ways.

Developmental psychologists bring their own insights from observing and studying the evolution of cognition in young children. What exactly is evolving in their young minds, and how does it differ from, yet eventually lead to, adult cognition? And what about the explosion of screen time?

Pediatric researchers, confronted with AI-obsessed youngsters and worried parents, are coming at it from the opposite direction. With 95% of 13- to 17-year-olds now using social media platforms, machines are a developmental force, according to the American Academy of Child and Adolescent Psychiatry. The machine has risen in status and influence from a sideline assistant coach to an on-field teammate.

Scholars admit, “It is unclear at what point a child may be developmentally ready to engage with these machines.” At the same time, they are forced to concede that the technological tidal wave leaves few alternatives: “Conversely, it is likely that completely shielding children from these technologies may stunt their readiness for a technological world.”

Bence P. Ölveczky, an evolutionary biologist from Harvard, is pretty certain about what cognition is and is not. He says it “requires learning; isn’t a reflex; depends on internally generated brain dynamics; needs access to stored models and relationships; and relies on spatial maps.”

Thomas Suddendorf, PhD, a research psychologist from New Zealand who specializes in early childhood and animal cognition, takes a more fluid and nuanced approach. He says, “Cognitive psychology distinguishes intentional and unintentional, conscious and unconscious, effortful and automatic, slow and fast processes (for example), and humans deploy these in diverse domains from foresight to communication, and from theory-of-mind to morality.”

Perhaps the last word on this should go to Descartes. He believed that humans’ mastery of thoughts and feelings separated them from animals, which he considered to be “mere machines.”

Were he with us today, witnessing generative AI’s insatiable appetite for data, its hidden recesses of learning, the speed and power of its insurgency, and human uncertainty about how to turn the thing off, perhaps his judgment of these machines would be less disparaging, and more akin to that of Mira Murati, OpenAI’s chief technology officer, who announced with some degree of understatement this month, “We are looking at the future of the interaction between ourselves and machines.”

Tags: allen newell > Bence Olveczky > cognition > cognitive psychology > Descartes > developmental biology > evolutionary biology > GPT-4o > Mira Murati > neuroscience > OpenAI > Tim Bayne

May 17, 2024 Address at Presidents College/U. of Hartford: “Artificial Intelligence (AI) and The Future of American Medicine.”

Posted on May 18, 2024

Artificial Intelligence (AI) and the Future of American Medicine

Link here: https://www.healthcommentary.org/about/artificial-intelligence-ai-and-the-future-of-medicine/

GPT-4o: “From babble to concordance to inclusivity…”

Posted on May 14, 2024

Mike Magee

If you follow my weekly commentary on HealthCommentary.org or THCB, you may have noticed over the past six months that I appear to be obsessed with mAI, or artificial intelligence’s intrusion into the health sector.

So today, let me share a secret. My deep dive has been part of a long preparation for a lecture (“AI Meets Medicine”) I will deliver this Friday, May 17, at 2:30 PM in Hartford, CT. If you are in the area, it is open to the public. You can register to attend HERE.

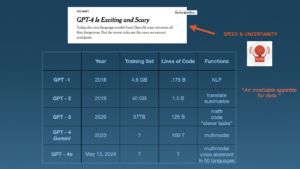

The image above is a portion of one of 80 slides I will cover over the 90-minute presentation on a topic that is massive, revolutionary, transformational and complex. It is also a moving target, as illustrated by the final row above, which I added this morning.

The addition was forced by Mira Murati, OpenAI’s chief technology officer, who announced from a perch in San Francisco yesterday that, “We are looking at the future of the interaction between ourselves and machines.”

The new application, designed for both computers and smartphones, is GPT-4o. Unlike prior members of the GPT family, which distinguished themselves by their self-learning generative capabilities and an insatiable thirst for data, this new application is not so much focused on the search space, but instead creates a “personal assistant” that is speedy and conversant in text, audio and image (“multimodal”).

OpenAI says this is “a step towards much more natural human-computer interaction,” and is capable of responding to your inquiry “with an average 320 millisecond (delay) which is similar to a human response time.” And they are quick to stress that this is just the beginning, stating on their website this morning, “With GPT-4o, we trained a single new model end-to-end across text, vision, and audio, meaning that all inputs and outputs are processed by the same neural network. Because GPT-4o is our first model combining all of these modalities, we are still just scratching the surface of exploring what the model can do and its limitations.”

It is useful to remember that this whole AI movement, in medicine and every other sector, is about language. And as language experts remind us, “Language and speech in the academic world are complex fields that go beyond paleoanthropology and primatology,” requiring a working knowledge of “Phonetics, Anatomy, Acoustics and Human Development, Syntax, Lexicon, Gesture, Phonological Representations, Syllabic Organization, Speech Perception, and Neuromuscular Control.”

The notion of instantaneous, multimodal communication with machines has seemingly come out of nowhere, but it is actually the product of nearly a century of imaginative, creative and disciplined discovery by information technologists and human speech experts, who have only recently fully converged. As Paleolithic archaeologist Paul Pettitt, PhD, puts it, “There is now a great deal of support for the notion that symbolic creativity was part of our cognitive repertoire as we began dispersing from Africa.” That is to say, “Your multimodal computer imagery is part of a conversation begun a long time ago in ancient rock drawings.”

Throughout history, language has been a species accelerant, a secret power that has allowed us to dominate and rise quickly (for better or worse) to the position of “masters of the universe.” The shorthand: We humans have moved “From babble to concordance to inclusivity…”

GPT-4o is just the latest advance, but it is notable not because it emphasizes the capacity for “self-learning,” which the New York Times correctly bannered as “Exciting and Scary,” but because it focuses on speed and efficiency in an effort to compete on an even playing field with human-to-human language. As OpenAI states, “GPT-4o is 2x faster, half the price, and has 5x higher (traffic) rate limits compared to GPT-4.”

Practicality and usability are the words I’d choose. In the company’s words, “Today, GPT-4o is much better than any existing model at understanding and discussing the images you share. For example, you can now take a picture of a menu in a different language and talk to GPT-4o to translate it, learn about the food’s history and significance, and get recommendations.”

In my lecture, I will cover a great deal of ground, as I attempt to provide historic context, relevant nomenclature and definitions of new terms, and the great potential (both good and bad) for applications in health care. As many others have said, “It’s complicated!”

But as this week’s announcement in San Francisco makes clear, the human-machine interface has blurred significantly. Or as Mira Murati put it, “You want to have the experience we’re having — where we can have this very natural dialogue.”

Tags: artificial intelligence > GPT-4o > health care > language > mAI > Mira Murati > multimodal > OpenAI

Justice Amy Coney Barrett Has a Mortal Sin On Her Conscience.

Posted on May 8, 2024

Mike Magee

Justice Amy Coney Barrett, Catholic and conservative, likely found this week’s Health Affairs article on violence toward women in red states that have banned abortion uncomfortable reading. Its authors state that the “rapidly increasing passage of state legislation has restricted or banned access to abortion care” and has triggered an increase in “intimate partner violence” among girls and women aged 10 to 44. The authors documented that each targeted action to restrict access to abortion providers brought a 3.4% rise in violence against these pregnant girls and women.

It is not as if the Supreme Court justices weren’t warned that their ruling would immediately trigger reactivation of century-old laws designed to reinforce the second-class citizenship of women. Nor were they unaware that captive red-state legislatures would likely go “fast and furious” in pursuit of fetal “personhood,” regardless of the potential loss of life and limb to mothers. And yet, faced with radical evangelical leaders’ targeted attacks on IVF, even conservative justices (excepting Clarence Thomas) seemed genuinely surprised.

For experts in maternal-fetal health, the findings come as no surprise. A landmark 2014 study in BMC Medicine found that up to 22% of women seeking abortion were the victims of intimate partner violence. The study concluded that “Policies restricting abortion provision may result in more women being unable to terminate unwanted pregnancies, potentially keeping them in contact with violent partners, and putting women and their children at risk.”

It is not as if “Intimate Partner Violence” is uncommon in the U.S. A New England Journal of Medicine article, published four months after the Dobbs decision was handed down, documented that “Overall, one in three women in the United States experiences contact sexual violence, physical violence, or stalking by an intimate partner (or a combination of these) at some point, with higher rates among women in historically marginalized racial or ethnic groups.”

That data was available on government websites well before the Justices announced the Dobbs decision on June 24, 2022. Red states already had unfavorable demographics when it came to violence against women. Permissive gun policies, combined with substandard safety nets in these states, have created a toxic health environment for young women. As the data show, “Most vulnerable in this new legal landscape will be people who have limited access to resources and services and inadequate protection against violence, especially those living in overburdened communities — primarily young, low-income women from historically marginalized racial or ethnic groups…The majority of Intimate Partner Violence related homicides involve firearms.”

One must wonder whether Justice Amy Coney Barrett, the only conservative woman on the Court, second-guessed herself when she read the November article’s predictions about the fallout from Dobbs. It read, “Legal restrictions on reproductive health care and access to abortion will leave people more vulnerable to control by their abusers. Policies permitting easier access to firearms, including the ability to carry guns in public, will further jeopardize survivors’ safety.”

With Justice Barrett’s support, the Supreme Court had voted on two successive days in favor of less restricted access to guns and more restricted access to abortion. In New York State Rifle & Pistol Association v. Bruen, decided one day before the Dobbs case, Barrett and her fellow conservatives struck down New York’s requirement that its citizens show a “special need for self-defense” to carry a handgun outside the home. Justice Stephen Breyer, in his dissent the following day, made clear the carnage that lay ahead. He cited the fact that “women are five times as likely to be killed by an intimate partner if the partner has access to a gun.”

If Justice Barrett is unable to somehow make this right in the future, she will be left (as we Catholics like to say) to carry this sin on her conscience. And to be clear, for the women of America, this sin is “mortal,” not “venial.” According to Catholic teaching, the conditions for a “mortal sin” are:

1. “Serious or grave matter. This often means that the action is clearly evil or severely disordered.”

2. “Sufficient knowledge or reflection. This means we know we are committing a sinful act and that we have had sufficient reflection for it to be intentional.”

3. “Full consent of the will. This means that we must freely choose to commit a grave sin in order for it to be a mortal sin.”

Tags: amy coney barrett > banned abortions > BMC Medicine > bruen case > catholicism > conservatives > Dobbs > health affairs > mortal sin > supreme court > venial sin > womens health care

Will AI Revolutionize Surgical Care? Yes, But Maybe Not How You Think.

Posted on May 2, 2024

Mike Magee

If you talk to consultants about AI in Medicine, it’s full speed ahead. GenAI assistants, “upskilling” the work force, reshaping customer service, new roles supported by reallocation of budgets, and always with one eye on “the dark side.”

But one area that has been relatively silent is surgery. What’s happening there? In June 2023, the American College of Surgeons (ACS) weighed in with a report that largely stated the obvious. They wrote, “The daily barrage of news stories about artificial intelligence (AI) shows that this disruptive technology is here to stay and on the verge of revolutionizing surgical care.”

Their summary self-analysis was cautious, stating: “By highlighting tools, monitoring operations, and sending alerts, AI-based surgical systems can map out an approach to each patient’s surgical needs and guide and streamline surgical procedures. AI is particularly effective in laparoscopic and robotic surgery, where a video screen can display information or guidance from AI during the operation.”

It is increasingly obvious that the ACS is not anticipating an invasion of robots. In many ways, this is understandable. The operating theater does not reward hyperbole or flashy performances. In an environment where risk is palpable, and simple tremors at the wrong time, and in the wrong place, can be deadly, surgical players are well-rehearsed and trained to remain calm, conservative, and alert members of the “surgical team.”

Johnson & Johnson’s AI surgery arm, MedTech, brands surgeons as “high-performance athletes” who are continuous trainers and learners…but also time-constrained “busy surgeons.” The heads of their AI business unit say that they want “to make healthcare smarter, less invasive, more personalized and more connected.” As a business unit, they decided to focus heavily on surgical education. “By combining a wealth of data stemming from surgical procedures and increasingly sophisticated AI technologies, we can transform the experience of patients, doctors and hospitals alike. . . When we use AI, it is always with a purpose.”

The surgical suite is no stranger to technology. Over the past few decades, lasers, laparoscopic equipment, microscopes, embedded imaging, all manner of alarms and alerts, and stretcher-side robotic workstations have become commonplace. It’s not as if mAI is ACS’s first tech rodeo.

Mass General surgeon Jennifer Eckhoff, MD, sees the movement in broad strokes: “Not surprisingly, the technology’s biggest impact has been in the diagnostic specialties, such as radiology, pathology, and dermatology.” University of Kentucky surgeon Danielle Walsh, MD, also chose to look at other departments: “AI is not intended to replace radiologists – it is there to help them find a needle in a haystack.” But make no mistake, surgeons are aware that change is on the way. University of Minnesota surgeon Christopher Tignanelli, MD, believes the future is now. He says, “AI will analyze surgeries as they’re being done and potentially provide decision support to surgeons as they’re operating.”

Most surgeons believe that AI robotics as a challenger to their surgical roles is pure science fiction. But as a companion and team member, most see the role of AI increasing, and increasing rapidly, in the O.R. The greater the complexity, the greater the need. As Mass General’s Eckhoff says, “Simultaneously processing vast amounts of multimodal data, particularly imaging data, and incorporating diverse surgical expertise will be the number one benefit that AI brings to medicine. . . Based on its review of millions of surgical videos, AI has the ability to anticipate the next 15 to 30 seconds of an operation and provide additional oversight during the surgery.”

Because surgery is a powerful profit center for most hospitals, dollars are likely to keep pace with vision as long as the “dark side of AI” is kept at bay. That includes “guidelines and guardrails” as outlined by new, rapidly forming elite academic AI collaboratives, like the Coalition for Health AI. Quality control, acceptance of liability and personal responsibility, and patient confidence and trust are all prerequisites. But the rewards, in the form of diagnostics, real-time safety feedback, precise and tremor-free technique, speed and efficient execution, and improved outcomes, will likely more than make up for the investment in time, training, and dollars.

Tags: ACS > AI > AI in Surgery > Christopher Tignanelli MD > Coalition for Health AI > Danielle Walsh MD > Healthcare Technology > J&J > jennifer Eckhoff MD > MedTech