GPT-4o: “From babble to concordance to inclusivity…”

Posted on | May 14, 2024 | 3 Comments

Mike Magee

If you follow my weekly commentary on HealthCommentary.org or THCB, you may have noticed over the past 6 months that I appear to be obsessed with mAI, or Artificial Intelligence intrusion into the health sector space.

So today, let me share a secret. My deep dive has been part of a long preparation for a lecture (“AI Meets Medicine”) I will deliver this Friday, May 17, at 2:30 PM in Hartford, CT. If you are in the area, it is open to the public. You can register to attend HERE.

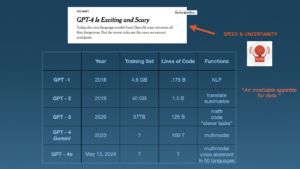

The image above is a portion of one of 80 slides I will cover over the 90-minute presentation on a topic that is massive, revolutionary, transformational and complex. It is also a moving target, as illustrated in the final row above, which I added this morning.

The addition was forced by Mira Murati, OpenAI’s chief technology officer, who announced from a perch in San Francisco yesterday that, “We are looking at the future of the interaction between ourselves and machines.”

The new application, designed for both computers and smartphones, is GPT-4o. Unlike prior members of the GPT family, which distinguished themselves by their self-learning generative capabilities and an insatiable thirst for data, this new application is focused less on the search space and more on creating a “personal assistant” that is speedy and conversant in text, audio and image (“multimodal”).

OpenAI says this is “a step towards much more natural human-computer interaction,” and is capable of responding to your inquiry “with an average 320 millisecond (delay) which is similar to a human response time.” And they are quick to emphasize that this is just the beginning, stating on their website this morning, “With GPT-4o, we trained a single new model end-to-end across text, vision, and audio, meaning that all inputs and outputs are processed by the same neural network. Because GPT-4o is our first model combining all of these modalities, we are still just scratching the surface of exploring what the model can do and its limitations.”

It is useful to remember that this whole AI movement, in medicine and every other sector, is about language. And as experts in language remind us, “Language and speech in the academic world are complex fields that go beyond paleoanthropology and primatology,” requiring a working knowledge of “Phonetics, Anatomy, Acoustics and Human Development, Syntax, Lexicon, Gesture, Phonological Representations, Syllabic Organization, Speech Perception, and Neuromuscular Control.”

The notion of instantaneous, multimodal communication with machines has seemingly come out of nowhere, but it is actually the product of nearly a century of imaginative, creative and disciplined discovery by information technologists and human speech experts, who have only recently fully converged with each other. As Paleolithic archaeologist Paul Pettitt, PhD, puts it, “There is now a great deal of support for the notion that symbolic creativity was part of our cognitive repertoire as we began dispersing from Africa.” That is to say, “Your multimodal computer imagery is part of a conversation begun a long time ago in ancient rock drawings.”

Throughout history, language has been a species accelerant, a secret power that has allowed us to dominate and rise quickly (for better or worse) to the position of “masters of the universe.” The shorthand: We humans have moved “From babble to concordance to inclusivity…”

GPT-4o is just the latest advance, but it is notable not because it emphasizes the capacity for “self-learning,” which the New York Times correctly bannered as “Exciting and Scary,” but because it is focused on speed and efficiency in the effort to compete on an even playing field with human-to-human language. As OpenAI states, “GPT-4o is 2x faster, half the price, and has 5x higher (traffic) rate limits compared to GPT-4.”

Practicality and usability are the words I’d choose. In the company’s words, “Today, GPT-4o is much better than any existing model at understanding and discussing the images you share. For example, you can now take a picture of a menu in a different language and talk to GPT-4o to translate it, learn about the food’s history and significance, and get recommendations.”

In my lecture, I will cover a great deal of ground, as I attempt to provide historic context, relevant nomenclature and definitions of new terms, and the great potential (both good and bad) for applications in health care. As many others have said, “It’s complicated!”

But as this week’s announcement in San Francisco makes clear, the human-machine interface has blurred significantly. Or as Mira Murati put it, “You want to have the experience we’re having — where we can have this very natural dialogue.”

Tags: artificial intelligence > GPT-4o > health care > language > mAI > Mira Murati > multimodal > OpenAI

Comments

3 Responses to “GPT-4o: “From babble to concordance to inclusivity…””

May 15th, 2024 @ 4:28 am

Hey Mike.

It seems that every time I read another one of your articles I find myself repeating the signature commentary of actor Peter Boyle’s portrayal of Frank Barone: “Holy Crap!” You are a wonder, my friend.

Sadly there is no way I can be in Hartford to attend your lecture. Is there any way I can get a copy?

Thanks, and my best to you and Pat. I wish life had allowed us to remain closer because I think our kids would have liked each other, but it just was not to be. Take care my friend.

May 15th, 2024 @ 10:10 am

Thanks for this, Larry. Always appreciate your words of encouragement – which now date back nearly 60 years. A copy of this address will be available following Friday’s presentation. I’ll send along the link once it is available. And I still hold promise we will meet again in person before our final performances. Best, Mike

May 18th, 2024 @ 9:57 am

As promised, here is the link to the May 17, 2024 speech at Presidents College at the University of Hartford:

https://www.healthcommentary.org/about/artificial-intelligence-ai-and-the-future-of-medicine/